To build the embedding model, we adopted a staged training strategy that improves performance step by step and ultimately produced the doubao-embedding-vision model. The training pipeline consists of three core stages.

Training objective (Stage 1): give the model basic embedding capabilities, turning a vision-language model (VLM) into an embedding model.

Training strategy: We trained on a large-scale text-only corpus made up of multi-domain public data collected from the internet plus some synthetic data. For the public data, we designed data-cleaning algorithms and filtering rules that remove noise, duplicates, and irrelevant content to keep data quality high. The synthetic data was produced by using large language models to expand specific seed data, so that it covers a wide range of domains and topics. Each training sample is a text pair, and the model is trained contrastively with the InfoNCE loss.
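The loss code itself is not published. As a minimal sketch, assuming in-batch negatives (each query's paired text is its positive and every other text in the batch is a negative), cosine similarity, and a hypothetical temperature of 0.05, InfoNCE over a batch of text-pair embeddings can be computed as below. The function names are illustrative only.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(
    queries: Sequence[Vector],
    positives: Sequence[Vector],
    temperature: float = 0.05,  # hypothetical value, not taken from the source
) -> float:
    """Mean InfoNCE loss with in-batch negatives (an assumption).

    positives[i] is the positive for queries[i]; every positives[j] with
    j != i serves as a negative for queries[i].
    """
    n = len(queries)
    total = 0.0
    for i in range(n):
        # Similarity of query i to every text in the batch, scaled by temperature.
        logits = [cosine(queries[i], positives[j]) / temperature for j in range(n)]
        # Numerically stable log-sum-exp.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        # -log softmax probability of the true pair.
        total += log_sum_exp - logits[i]
    return total / n
```

With correctly aligned pairs the loss approaches zero; pairing each query with the wrong text drives it up, which is the signal contrastive training follows.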

Training objective (Stage 2): building on Stage 1, add multimodal alignment across text, images, and video.

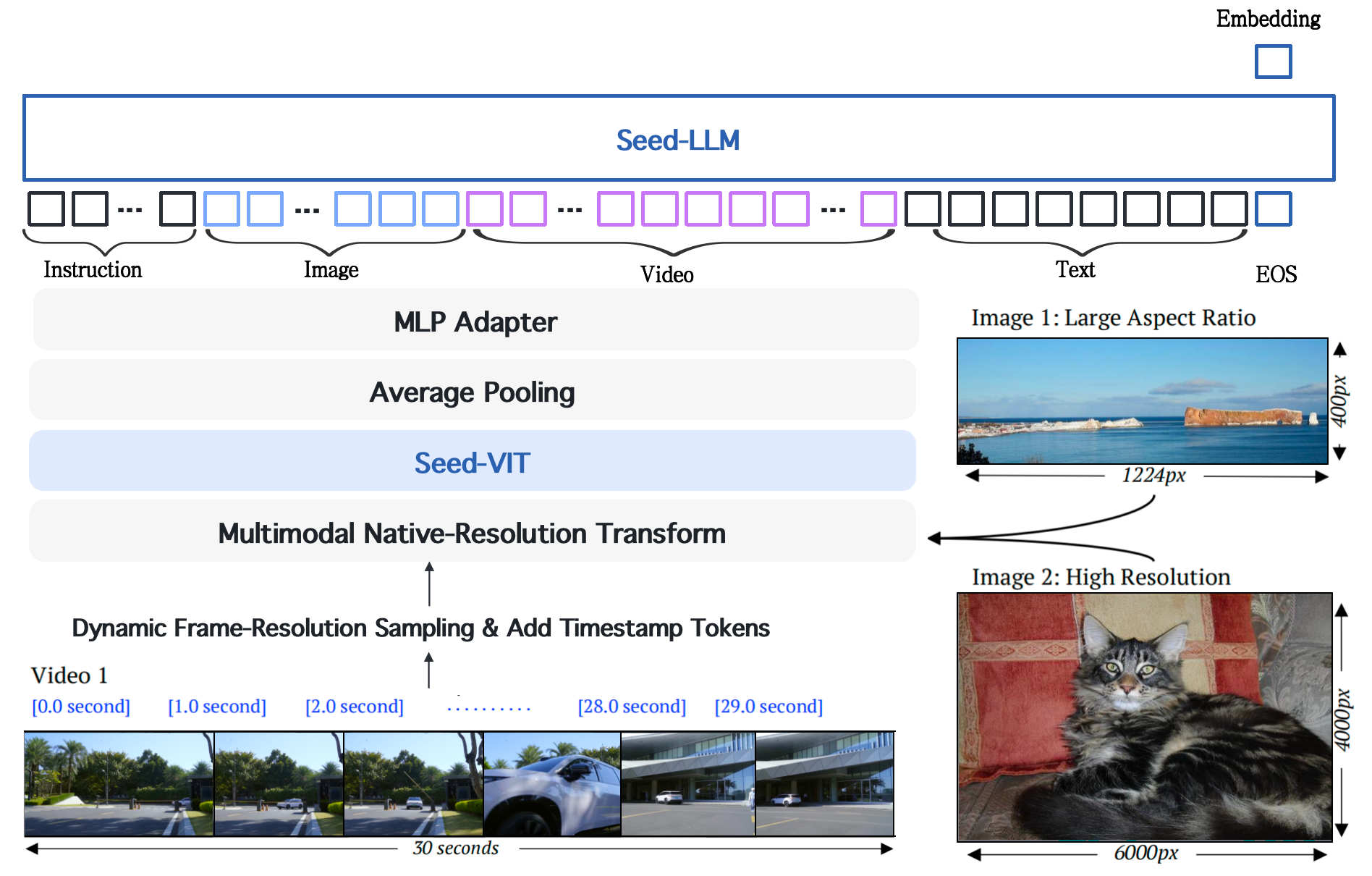

Training strategy: We collected tens of millions of image-text and video-text pairs for training, part of which was sourced from the internet. To ensure data quality, we first cleaned and filtered the images rigorously, discarding blurry, corrupted, or low-resolution ones. To build high-quality image-text pairs, we also designed a data-production pipeline that generates accurate, detailed captions for the raw images, so that each image and its text are precisely aligned semantically. Training again uses the InfoNCE loss, which pulls matched image-text pairs together and pushes mismatched pairs apart in the embedding space, steadily strengthening the model's understanding of multimodal data.
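To illustrate the image-filtering step, the sketch below rejects malformed, low-resolution, and blurry grayscale images. The blur test uses the variance of a 4-neighbour Laplacian, a common sharpness heuristic; this is our assumption, not necessarily the method used here, and the thresholds `min_side=224` and `min_sharpness=100.0` as well as the function names are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale pixel values, row-major


def laplacian_variance(img: Image) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Sharp edges give large Laplacian responses; low variance suggests blur.
    Requires an image of at least 3x3 pixels.
    """
    h, w = len(img), len(img[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def keep_image(img: Image, min_side: int = 224, min_sharpness: float = 100.0) -> bool:
    """Return True if the image passes the (hypothetical) quality filter."""
    h = len(img)
    w = len(img[0]) if h else 0
    # Reject empty or ragged arrays, a stand-in for corrupted/truncated images.
    if h == 0 or w == 0 or any(len(row) != w for row in img):
        return False
    # Reject low-resolution images (also guarantees the 3x3 minimum).
    if min(h, w) < max(min_side, 3):
        return False
    # Reject blurry images.
    return laplacian_variance(img) >= min_sharpness
```

A uniform image has zero Laplacian variance and is rejected as blurry, while a high-contrast pattern of sufficient size passes.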

Training objective (Stage 3): introduce data of varied formats, modalities, and task types to improve the model's performance across specialized scenarios and complex tasks, so that it better meets practical needs such as information retrieval and content classification.

Training strategy: We systematically built a high-quality fine-tuning dataset along three dimensions: task type, input modality, and task scenario. On the one hand, we drew on the task types and data formats of public benchmark datasets; on the other, we drew closely on Volcano Engine's real business needs and practical experience. Together, these produced dozens of task-specific datasets. For each dataset, we wrote dedicated instructions suited to its characteristics and scenario, guiding the model to learn how to handle that task while retaining some ability to generalize.

For scenarios and tasks with little training data, we used data augmentation and synthesis to enlarge the data. For harder tasks where training results were poor, we mined negative samples at different difficulty levels to improve performance on complex tasks. Finally, we trained on a mixture of all datasets over multiple rounds of iterative optimization, which gave doubao-embedding-vision strong generalization and performance across specialized scenarios.
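The sketch below shows how a single Stage 3 sample might be assembled, assuming a retriever has already scored candidate documents against the query. The instruction template, the similarity bands in `tiers`, and all names (`Sample`, `format_query`, `mine_tiered_negatives`, `build_sample`) are hypothetical; the source does not specify how instructions are formatted or how difficulty levels are defined. Negatives are drawn from progressively easier similarity bands, and the hardest band is capped below the top of the range so that near-duplicates, which may be unlabeled positives, are skipped.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Sample:
    query: str            # instruction-prefixed query text
    positive: str
    negatives: List[str]


def format_query(instruction: str, query: str) -> str:
    # Hypothetical template; the real instruction format is not published.
    return f"Instruct: {instruction}\nQuery: {query}"


def mine_tiered_negatives(
    scored: Sequence[Tuple[str, float]],  # (candidate, similarity to the query)
    positive: str,
    tiers: Sequence[Tuple[float, float]] = ((0.8, 0.95), (0.6, 0.8), (0.0, 0.6)),
    per_tier: int = 1,
) -> List[str]:
    """Pick up to `per_tier` negatives from each [low, high) similarity band.

    Bands are visited hardest first; within a band, the most similar
    candidates are preferred. The positive itself is never selected.
    """
    ranked = sorted(scored, key=lambda cs: cs[1], reverse=True)
    chosen: List[str] = []
    for low, high in tiers:
        band = [c for c, s in ranked
                if low <= s < high and c != positive and c not in chosen]
        chosen.extend(band[:per_tier])
    return chosen


def build_sample(instruction: str, query: str, positive: str,
                 scored: Sequence[Tuple[str, float]]) -> Sample:
    """Combine the task instruction, positive, and tiered negatives."""
    return Sample(
        query=format_query(instruction, query),
        positive=positive,
        negatives=mine_tiered_negatives(scored, positive),
    )
```

Mixing one hard, one medium, and one easy negative per query is one way to expose the model to several difficulty levels at once; the exact ratio would be a tuning choice.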
